Introduction

This review covers “Vector Databases: From Embeddings to Applications – AI-Powered Course”, a training product whose description states it “teaches how data vectorization and vector databases enable context-based search over keyword matching, multimodal data search, enhance recommendation systems, and power LLMs.” It aims to give potential buyers an objective assessment, based on the supplied product description, of whether the course fits their needs, covering its likely strengths, weaknesses, practical value, and limitations.

Product Overview

Product Title: Vector Databases: From Embeddings to Applications – AI-Powered Course

Manufacturer / Provider: Not specified in the supplied product data — the listing does not identify an instructor, institution, or platform.

Product Category: Online technical course / e-learning (specialized in vector representations, vector databases, and applied retrieval/LLM workflows).

Intended Use: To teach practitioners and decision-makers how embeddings and vector databases enable semantic search, multimodal retrieval, recommender improvements, and LLM augmentation (retrieval-augmented generation).

Appearance, Materials & Aesthetic

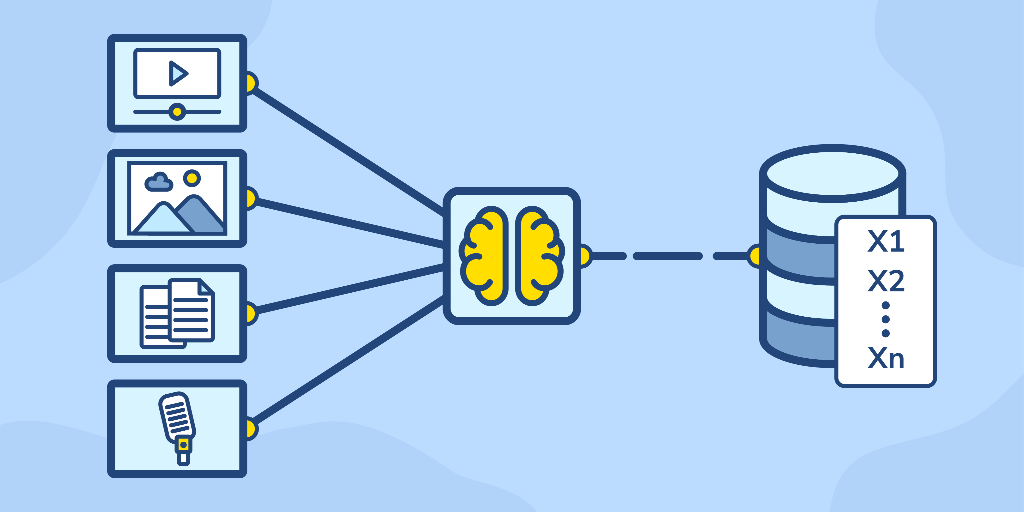

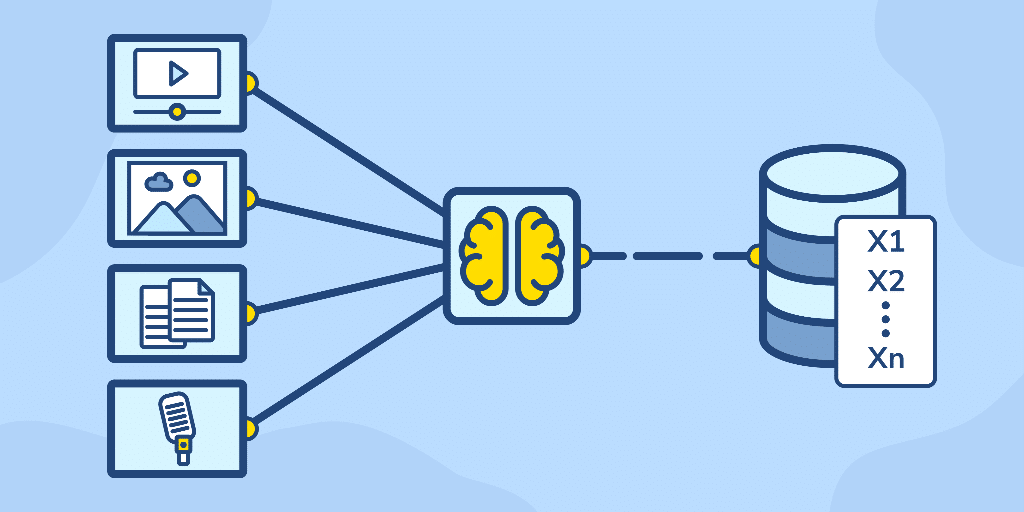

The product is a digital course; there is no physical appearance. Based on the description and conventions for similar courses, the course likely includes a mix of:

- Video lectures or screencasts with instructor narration.

- Slide decks or PDFs summarizing concepts.

- Code notebooks (Jupyter/Colab) with examples for vectorization, indexing, and retrieval.

- Hands-on demo apps (small web UIs) to illustrate semantic search or multimodal retrieval.

- Reference links, recommended reading, and possibly a GitHub repo with code.

Aesthetic and UX: Expect a standard modern e-learning aesthetic: clean slides, code-focused demos, and CLI/IDE screenshots. The design elements that tend to benefit learners most are interactive notebooks, step-by-step project builds, and downloadable starter templates. The exact visual polish and layout depend on the unknown provider.

Key Features / Specifications

- Core Focus: Embeddings (vectorization) and vector databases for semantic / context-based search versus keyword matching.

- Applications Covered: Multimodal search (text + images), recommendation system enhancements, and powering LLMs through retrieval-augmented generation (RAG).

- Hands-on Component: Presumed practical labs or code examples for producing embeddings, building indexes, and executing similarity search queries.

- Conceptual Coverage: Vector representation fundamentals, similarity metrics (cosine/Euclidean), approximate nearest neighbor (ANN) search concepts, and trade-offs between accuracy and speed.

- Tools & Ecosystem: While not specified, courses of this scope commonly demonstrate tools such as FAISS, Annoy, Milvus, Pinecone, Weaviate, or Qdrant, and embedding providers like OpenAI/Hugging Face/sentence-transformers.

- Intended Audience: Data scientists, ML engineers, software engineers, product managers, and researchers interested in applying semantic search and LLM augmentation.

- Delivery Format: Online/digital — likely self-paced with downloadable code and resources (assumption based on standard practice).
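For readers unfamiliar with the similarity metrics listed above, the following is a minimal sketch (not taken from the course) of cosine similarity, Euclidean distance, and exact brute-force search in plain Python. The three-dimensional vectors and document titles are made-up toy values; real embeddings come from a model and typically have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between a and b: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def euclidean_distance(a, b):
    """Straight-line distance between a and b: 0.0 means identical vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def top_k(query, vectors, k=2):
    """Exact search: score every stored vector and keep the k most similar."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in vectors.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]

# Hypothetical toy "embeddings" for illustration only.
docs = {
    "cat care guide": [0.9, 0.1, 0.0],
    "kitten feeding tips": [0.8, 0.3, 0.1],
    "tax filing deadline": [0.0, 0.2, 0.9],
}
query = [1.0, 0.2, 0.0]  # stands in for the embedding of a pet-related query

results = top_k(query, docs, k=2)
print(results)
```

The two pet-related documents rank above the tax document even though no keywords are compared, which is the core idea behind semantic search.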

Experience Using the Course (Practical Scenarios)

1) Beginner / Data Scientist Learning Embeddings

Strengths:

- The course description promises a focus on semantic similarity versus keyword matching, which is the essential starting point for understanding why embeddings matter.

- When paired with code notebooks, learners can see immediate results (embedding vectors, similarity scores), which reinforces concepts.

Limitations:

- If the course assumes prior knowledge of Python, linear algebra, or ML concepts, absolute beginners may need supplementary materials.

- Without clear indication of exercises or quizzes, it’s hard to judge how well beginners can assess mastery.

2) Engineer Building a Semantic Search Feature

Strengths:

- Practical demos of vector indexing and querying are invaluable for a production-minded engineer. The course promises focused coverage of context-based search.

- Clarification of ANN algorithms, index types, and metric trade-offs can accelerate implementation decisions.

Limitations:

- Production deployments require attention to scale, latency, persistence, monitoring, and cost. If the course does not include a deep dive into operational concerns (sharding, replication, consistency), engineers will need follow-up resources.
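To make the accuracy-versus-speed trade-off behind ANN search concrete, here is a rough sketch, not drawn from the course, that compares exact search with a toy approximate index based on random-hyperplane hashing. The data is random, the function names are hypothetical, and production libraries use far more refined index structures; the point is only that scanning fewer candidates can cost recall.

```python
import math
import random

def normalize(v):
    """Scale v to unit length so that dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def exact_top_k(query, vectors, k):
    """Brute force: rank every vector by similarity to the query."""
    order = sorted(range(len(vectors)), key=lambda i: dot(query, vectors[i]), reverse=True)
    return order[:k]

def signature(vec, planes):
    """Hash a vector by which side of each random hyperplane it falls on."""
    return tuple(dot(vec, p) >= 0 for p in planes)

def build_index(vectors, planes):
    """Group vector ids into buckets keyed by their hash signature."""
    buckets = {}
    for i, v in enumerate(vectors):
        buckets.setdefault(signature(v, planes), []).append(i)
    return buckets

def approx_top_k(query, vectors, buckets, planes, k):
    """Approximate: rank only the vectors that share the query's bucket."""
    candidates = buckets.get(signature(query, planes), [])
    ranked = sorted(candidates, key=lambda i: dot(query, vectors[i]), reverse=True)
    return ranked[:k], len(candidates)

rng = random.Random(0)  # fixed seed so runs are repeatable
dim, n, k = 16, 2000, 10
vectors = [normalize([rng.gauss(0, 1) for _ in range(dim)]) for _ in range(n)]
query = normalize([rng.gauss(0, 1) for _ in range(dim)])
exact = exact_top_k(query, vectors, k)

# More hyperplanes means smaller buckets: less work per query, usually lower recall.
for n_planes in (2, 4, 8):
    planes = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_planes)]
    buckets = build_index(vectors, planes)
    approx, scanned = approx_top_k(query, vectors, buckets, planes, k)
    recall = len(set(exact) & set(approx)) / k
    print(f"planes={n_planes} scanned={scanned}/{n} recall@{k}={recall:.2f}")
```

A course that covers index types in depth would ideally show how real libraries manage this trade-off through tunable parameters rather than a single hash table.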

3) Data Scientist / ML Engineer Implementing Recommendation Systems

Strengths:

- Vector embeddings can substantially improve recommendations by capturing latent similarity. The course’s stated scope aligns with this use case.

- Examples that show how to combine collaborative signals and content embeddings are especially useful.

Limitations:

- Recommendation systems often require A/B testing, feature blending, and online learning; a course focused on embeddings may not fully cover production experimentation and evaluation strategies.
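One simple way to combine collaborative signals with content embeddings, as mentioned in the strengths above, is a weighted blend of the two scores. The sketch below is an illustrative assumption, not the course's method: the item names, vectors, collaborative scores, and the `alpha` weight are all hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def blended_ranking(user_profile, item_embeddings, collab_scores, alpha=0.5):
    """Rank items by alpha * content similarity + (1 - alpha) * collaborative score."""
    scores = {}
    for item, emb in item_embeddings.items():
        content = cosine(user_profile, emb)
        # Items with no interaction history (cold start) get a collaborative score of 0.
        collab = collab_scores.get(item, 0.0)
        scores[item] = alpha * content + (1 - alpha) * collab
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical toy data: a user profile vector and three item embeddings.
user_profile = [1.0, 0.0]
item_embeddings = {"A": [1.0, 0.0], "B": [0.0, 1.0], "C": [0.6, 0.8]}
collab_scores = {"A": 0.1, "B": 0.9}  # "C" is a new item with no interactions

print(blended_ranking(user_profile, item_embeddings, collab_scores, alpha=0.5))
print(blended_ranking(user_profile, item_embeddings, collab_scores, alpha=1.0))
```

With `alpha=1.0` the new item "C" can still be ranked on content alone, which is one reason embeddings help with cold-start items. Choosing `alpha` in practice is exactly the kind of question that needs the A/B testing and evaluation noted above.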

4) Product Manager or Technical Lead Evaluating Vector DB Adoption

Strengths:

- Based on its description, the course should provide the conceptual grounding needed to understand the benefits of vector-based search and where to apply it versus legacy keyword systems.

- High-level comparisons between semantic and keyword approaches help inform product decisions and ROI discussions.

Limitations:

- Product stakeholders will benefit from explicit vendor comparisons, cost models, and case studies — if these are missing, decision-makers will still need vendor-specific research.

5) Researcher Working with Multimodal Data

Strengths:

- If the course includes multimodal examples (text + image embeddings), it can jumpstart prototyping of cross-modal retrieval systems.

Limitations:

- Multimodal workflows can be complex (alignment of embedding spaces, joint training); a general course may only scratch the surface unless it explicitly focuses on multimodal modeling and advanced architectures.

Pros

- Relevant, high-demand topic: Vector databases and embeddings are central to modern search, recommendation, and LLM pipelines.

- Application-focused: The course promises practical use-cases (semantic search, multimodal search, recommender improvements, LLM augmentation), which makes it useful beyond theory.

- Bridges concept to implementation: When paired with code examples and demos, the course can shorten the path from idea to working prototype.

- Cross-role value: Useful for engineers, data scientists, and product people who want a common vocabulary around vector-based systems.

Cons / Limitations

- Provider/instructor not specified: The product data does not identify the author, organization, or platform; instructor quality and reputation matter for technical topics.

- Scope ambiguity: The description is high level — it doesn’t list tools, depth, prerequisites, or duration. Buyers need clarity on what is included (labs, code, datasets).

- Potential gaps for production topics: Operational concerns (scaling, monitoring, cost, vendor lock-in) may not be fully covered unless the course explicitly includes them.

- Advanced research topics: If you need deep theoretical coverage (metric learning, embedding alignment, trainable retrieval models), this course may be introductory to intermediate rather than research-grade.

Conclusion

“Vector Databases: From Embeddings to Applications – AI-Powered Course” addresses a timely and valuable subject: how embeddings and vector databases transform search, recommendation, multimodal retrieval, and LLM workflows. The described focus on context-based search and applied use cases makes it attractive for engineers and data practitioners wanting to prototype semantic features and retrieval-augmented systems.

Strengths lie in its practical orientation and the clear relevance of the topic. However, the lack of provider/instructor information and concrete syllabus details in the supplied product data means buyers should verify the course’s depth, tooling coverage, prerequisite knowledge, and whether it includes hands-on labs, code repositories, and production-focused guidance.

Recommended next steps for potential buyers:

- Request a syllabus or module list and sample lesson to judge depth and tooling (FAISS, Milvus, Pinecone, Weaviate, Qdrant, Hugging Face, OpenAI, sentence-transformers, etc.).

- Check for included artifacts: GitHub repo, Jupyter/Colab notebooks, sample datasets, and demo apps.

- Confirm intended audience and prerequisites so you’re not surprised by assumed background knowledge.

Overall impression: promising and practical for applied learning about embeddings and vector DBs, but confirm specifics before purchase to ensure it matches your needs and technical level.
