Introduction

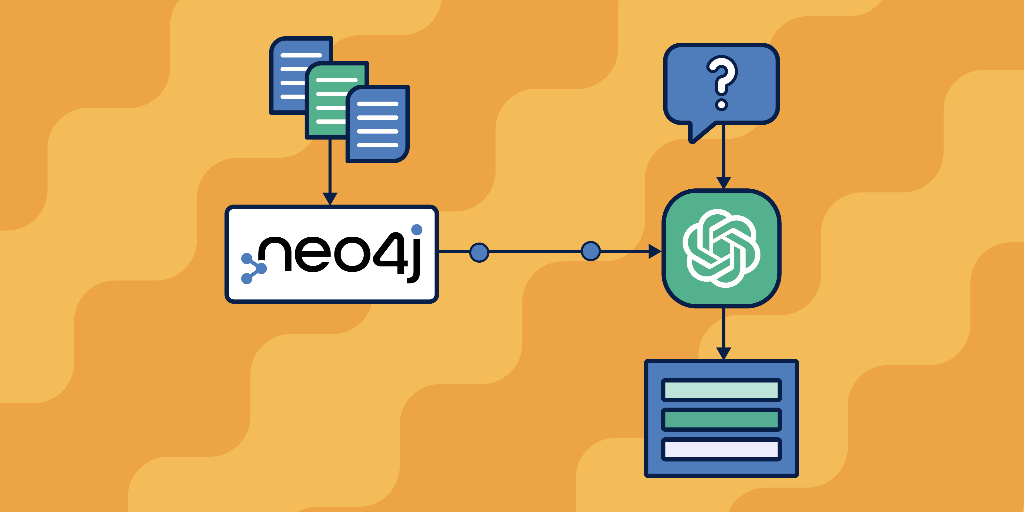

This review evaluates “Master Knowledge Graph Retrieval-Augmented Generation with Neo4j – AI-Powered Course” (referred to hereafter as the GraphRAG course). The course promises a focused, hands-on path to combining knowledge graphs (Neo4j) with Retrieval-Augmented Generation (RAG) techniques and OpenAI ChatGPT to improve response accuracy and reduce hallucinations. Below I summarize the course offering, assess its instructional design and practical utility, and provide recommendations for prospective learners.

Product Overview

Product title: Master Knowledge Graph Retrieval-Augmented Generation with Neo4j – AI-Powered Course.

Manufacturer / Provider: Not explicitly stated in the provided description. The course centers on Neo4j (the graph database) and OpenAI ChatGPT integration and is most relevant to organizations or instructors who teach practical AI engineering and knowledge-graph topics.

Product category: Online technical course / training module in applied AI, specifically on knowledge graphs, RAG (Retrieval-Augmented Generation), and LLM integration.

Intended use: To teach practitioners how to use a knowledge graph (Neo4j) alongside OpenAI ChatGPT to build RAG applications that reduce hallucinations and improve response accuracy. Target learners include software engineers, data scientists, ML engineers, and solution architects building QA systems, chatbots, or domain-specific assistants.

Design, Format, and Materials

As a digital course, the “appearance” is virtual rather than physical. Typical materials you can expect (implied by the course focus) include:

- Video lectures and slide decks explaining core concepts (knowledge graphs, RAG, embeddings, Neo4j queries).

- Hands-on code examples and notebooks (Python/JavaScript examples) demonstrating integration with Neo4j and OpenAI APIs.

- Sample datasets and schema examples for building a knowledge graph (entities, relationships, properties).

- Instructional walkthroughs for environment setup (Neo4j sandbox or Docker images), API keys, and tooling.

- Practical lab exercises or project assignments to implement an end-to-end GraphRAG pipeline.

Unique design elements likely emphasize practical, end-to-end implementation: building a curated knowledge graph, using graph queries to retrieve relevant context, converting graph results to prompts or context for ChatGPT, and strategies to evaluate and mitigate hallucination.

Key Features & Specifications

- Core topic coverage: Knowledge graphs fundamentals and Neo4j usage (modeling, Cypher queries), RAG principles, and integration with OpenAI ChatGPT.

- Hands-on implementation: Practical examples that show how to chain graph retrieval with LLM prompting to produce more accurate responses.

- Focus on hallucination reduction: Techniques and patterns to ground generative responses using structured graph data.

- Tooling & stack: Neo4j as the graph database and OpenAI (ChatGPT) as the LLM; likely includes code for embeddings, similarity search, and prompt engineering.

- Use-case orientation: Demonstrations tailored toward QA systems, domain assistants, or RAG-enhanced conversational agents.

- Prerequisites (implied): Basic familiarity with programming (Python or JS), databases, and LLM concepts; access to Neo4j and an OpenAI API key.

Experience Using the Course (Practical Scenarios)

Getting started / Setup

The onboarding experience is likely straightforward for engineers: set up a Neo4j instance (local, Docker, or cloud sandbox), obtain an OpenAI API key, and clone starter repositories. Expect stepwise guidance for environment setup and credentials management. Beginners without database or API experience will need some extra time or pre-course reading.

Hands-on labs / Building a GraphRAG pipeline

The core labs typically walk you through:

- Modeling a knowledge graph (nodes, relationships, properties) for your domain.

- Ingesting structured and unstructured data into Neo4j and attaching metadata for retrieval.

- Generating embeddings of graph nodes or documents (if covered), and performing similarity searches.

- Designing retrieval logic (Cypher queries or APIs) to find relevant graph context for a given user query.

- Formatting retrieved context into prompts for ChatGPT and evaluating responses for faithfulness and hallucinations.

This hands-on approach helps learners understand how graph structure improves retrieval relevance compared to naive vector-only RAG systems.

Real-world scenarios

Use-case examples where the course is especially valuable:

- Domain-specific virtual assistants (medical, legal, enterprise knowledge): using curated facts in graph form to ground LLM responses.

- Product or customer support bots: answering queries by traversing product knowledge graphs and returning precise, source-linked answers.

- Research assistants: extracting relationships and facts from literature and using graph context to generate accurate summaries.

- Hybrid search experiences: combining structured graph queries with generative summarization for exploratory interfaces.

Limitations experienced in practice

The course will likely highlight best practices, but real deployments expose additional challenges:

- Graph curation overhead — building and maintaining a high-quality knowledge graph requires domain expertise and ongoing ETL processes.

- Operational complexity — combining Neo4j, embedding storage, and LLM calls introduces latency, cost (OpenAI usage), and engineering overhead.

- Scalability & consistency — ensuring retrieval scales and remains accurate when the graph grows or evolves.

- Evaluation — measuring “reduced hallucinations” requires custom test sets and monitoring strategies not covered in depth by short courses.

Pros

- Focused, practical subject: Directly addresses a high-value area — combining graphs with RAG to improve answer accuracy.

- Hands-on integration: Emphasizes end-to-end implementation (Neo4j + OpenAI) rather than only theory.

- Actionable techniques to reduce hallucination: Practical patterns to ground LLMs in structured facts and provenance.

- Applicable to multiple domains: Techniques generalize to support chatbots, QA, enterprise knowledge, and research tools.

- Skills transfer: Teaches Neo4j and RAG patterns that are useful beyond the specific course project.

Cons

- No explicit provider details in the description — potential learners may want clarity on instructor credentials and support.

- Prerequisite knowledge implied — beginners may struggle without prior familiarity with databases, graph concepts, or APIs.

- Operational topics may be lightly covered — production-readiness (scaling, monitoring, cost optimization) often requires deeper treatment.

- Potential external costs — Neo4j instances, compute for embeddings, and OpenAI API usage add real-world expenses not included in course fees.

- Variation in materials — depending on the course’s depth, students may need supplementary resources for advanced graph engineering or LLM evaluation techniques.

Conclusion

Overall impression: “Master Knowledge Graph Retrieval-Augmented Generation with Neo4j” targets a timely and practical intersection of technologies. Based on the course description, it appears well-suited for intermediate practitioners—software engineers, ML engineers, and data scientists—who want to build RAG systems that leverage graph structure to ground LLM outputs and reduce hallucination. The strengths are its practical, hands-on orientation and direct focus on Neo4j + OpenAI integration. Areas for potential improvement include clearer disclosure of instructor credentials, more support for beginners, and deeper coverage of productionization concerns (scaling, cost, monitoring).

Recommendation: If you are building domain-specific assistants or enterprise QA systems and already have basic familiarity with databases and APIs, this course is likely a strong, directly applicable investment. If you are new to graphs or LLMs, consider supplementing the course with introductory Neo4j or LLM fundamentals before diving in.

Leave a Reply