Edge AI: Bringing Intelligence to IoT Devices – The Ultimate Guide to Real-Time Intelligence

Welcome to Edge AI, where artificial intelligence meets the physical world at the point where data is created. This isn’t just another tech trend; it’s a fundamental shift, and market estimates value Edge AI at $20.78 billion in 2024 with a projected $269.82 billion by 2032. In this comprehensive guide, you’ll discover how Edge AI is changing everything from manufacturing floors to medical devices and, more importantly, how you can harness it to build the next generation of intelligent applications.

Table of Contents

- The Edge AI Revolution: Why Now?

- What is Edge AI? Intelligence Without the Cloud

- Edge AI vs Cloud AI: The Ultimate Showdown

- Five Game-Changing Benefits of Edge AI

- Real-World Edge AI Case Studies

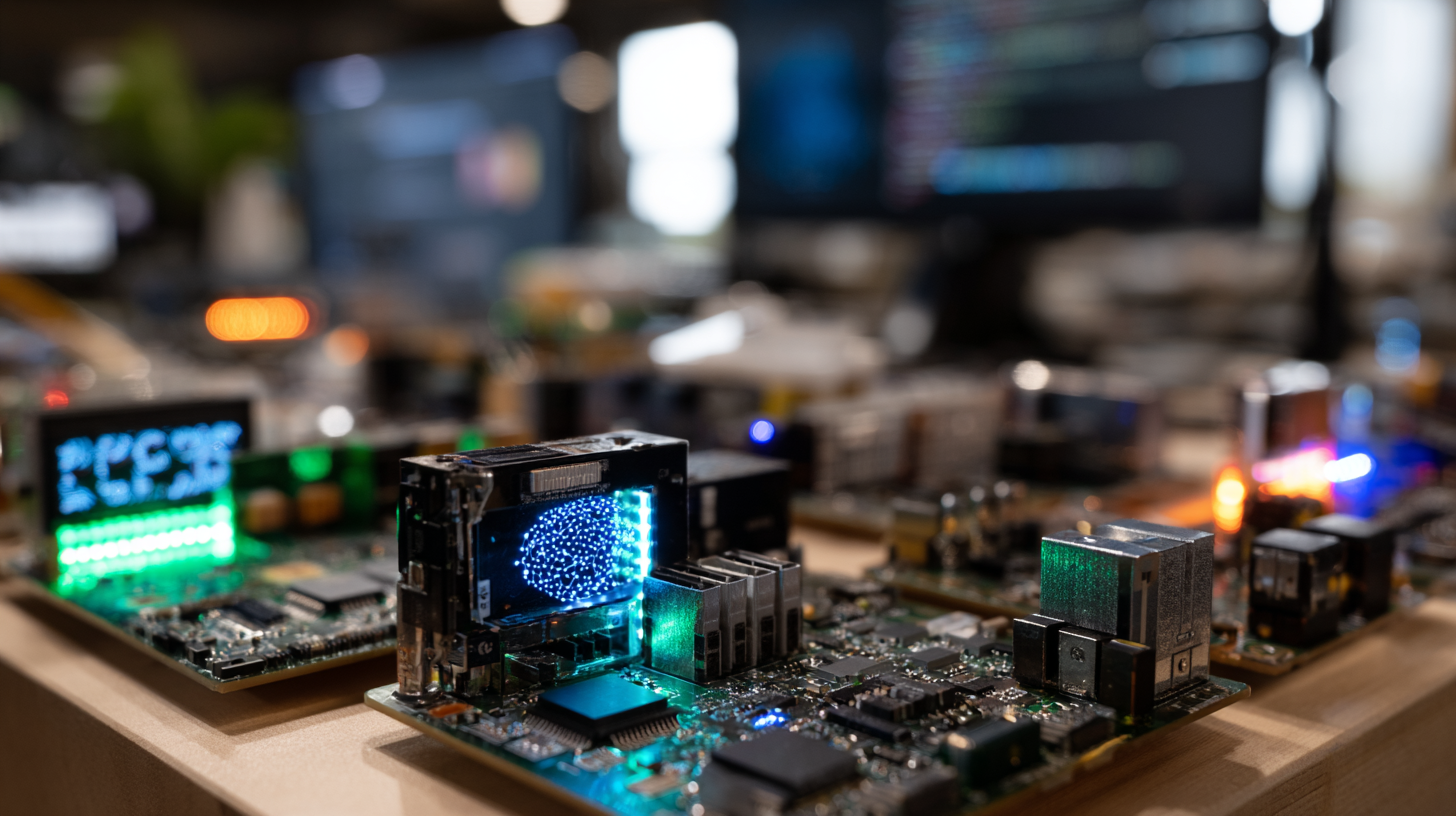

- Essential Edge AI Hardware: Your Toolkit

- Software Frameworks: From Code to Device

- Building Your First Edge AI Project

- The Future of Edge Intelligence

- Practical Implementation Guide

- Tools & Resources Deep Dive

- Career Opportunities in Edge AI

- Comprehensive FAQ

The Edge AI Revolution: Why Now?

A convergence of technological advances has created an unprecedented opportunity for edge computing. Gartner estimated that in 2018 only about 10% of enterprise-generated data was created and processed outside a traditional centralized data center or cloud, and predicted that this share would reach 75% by 2025. This massive transformation isn’t happening by accident.

Three critical factors are driving this revolution. First, the exponential growth of IoT devices has created more data than cloud infrastructure can economically process. Second, applications demanding real-time responses—like autonomous vehicles and industrial automation—simply can’t tolerate cloud latency. Third, privacy regulations and security concerns are pushing organizations to keep sensitive data local.

The semiconductor industry has responded with specialized AI chips designed for edge deployment. The edge AI accelerator market alone is projected to grow at a 30.83% compound annual growth rate, bringing powerful AI processing to devices no larger than a smartphone.

But this isn’t just about hardware capabilities. The real breakthrough comes from advances in AI model optimization. Techniques like quantization and pruning can compress neural networks to run efficiently on resource-constrained devices, often with only a small loss of accuracy relative to the original full-size model.
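To make quantization concrete, here is a minimal sketch of 8-bit affine quantization in plain Python: each float weight is mapped to an int8 value through a scale and a zero point, cutting storage from 4 bytes to 1 byte per weight. Real converters (TensorFlow Lite, OpenVINO and others) work per tensor or per channel and also calibrate activations; the function names here are illustrative, not any library's API.

```python
def quantize_int8(values):
    """Affine int8 quantization: q = round(x / scale) + zero_point, clamped to [-128, 127]."""
    lo = min(min(values), 0.0)             # the range must include 0.0 so it maps to an exact integer
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0       # 255 steps between the 256 int8 levels; avoid a zero scale
    zero_point = round(-128 - lo / scale)  # the int8 value that represents real 0.0
    q = [max(-128, min(127, round(x / scale) + zero_point)) for x in values]
    return q, scale, zero_point


def dequantize_int8(q, scale, zero_point):
    """Map int8 values back to approximate floats."""
    return [(v - zero_point) * scale for v in q]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
q, scale, zp = quantize_int8(weights)
print(q, scale, zp)
print(dequantize_int8(q, scale, zp))
```

Round-tripping a weight through these functions changes it by at most about one quantization step (`scale`), and 0.0 is recovered exactly, which matters for zero padding and ReLU outputs.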

What is Edge AI? Intelligence Without the Cloud

Edge AI represents the deployment of artificial intelligence algorithms directly on local devices—smartphones, cameras, sensors, industrial equipment—rather than in distant cloud data centers. Think of it as giving every device its own brain instead of relying on a shared, remote intelligence.

Edge AI can process data within milliseconds, providing real-time feedback directly on the device. A smart security camera using Edge AI can instantly tell a person from a package without sending video footage to the cloud. An industrial sensor can detect equipment anomalies and trigger maintenance alerts in real time, preventing costly downtime.

The core components of an Edge AI system include data collection sensors, local processing hardware with AI acceleration, pre-trained machine learning models optimized for edge deployment, and edge runtime software that manages inference operations. Modern edge devices can perform intensive AI calculations locally that were previously only possible in cloud environments.

Unlike traditional IoT systems that collect data and send it elsewhere for analysis, Edge AI closes the loop locally. This creates a new paradigm where devices become autonomous decision-makers rather than simple data collectors.

Edge AI vs Cloud AI: The Ultimate Showdown

Understanding when to deploy Edge AI versus Cloud AI is crucial for system architects and developers. Each approach offers distinct advantages depending on your application requirements.

Bandwidth and Connectivity: Edge AI dramatically reduces bandwidth requirements by processing data locally and only transmitting relevant insights or summaries to the cloud. Cloud AI requires constant data transmission, which can overwhelm network infrastructure and incur significant costs in high-volume applications.

Privacy and Security: Edge AI keeps sensitive data on the device, reducing privacy risks and regulatory compliance challenges. Personal health data, financial information, and proprietary industrial processes can be analyzed without ever leaving the local environment.

Computational Power: Cloud AI offers virtually unlimited computational resources for training complex models and processing massive datasets. Edge AI is constrained by device hardware but optimized for efficient inference operations.

Model Complexity: Cloud environments can run sophisticated, large-scale models with billions of parameters. Edge devices typically run compressed, optimized models designed for specific tasks and hardware constraints.

Operational Costs: Edge AI reduces ongoing cloud computing and data transmission costs but requires upfront hardware investment. Cloud AI spreads costs over time but can become expensive at scale due to data transfer and processing fees.

The most effective deployments often combine both approaches, using edge devices for real-time processing and cloud systems for model training, updates, and complex analytics.
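One common way to combine the two is confidence-based escalation: the on-device model handles every input, and only the cases it is unsure about are sent to a larger cloud model. The sketch below assumes both models are plain callables returning a `(label, confidence)` pair; the models and the 0.8 threshold are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    confidence: float
    source: str  # "edge" or "cloud"


def classify(sample, edge_model, cloud_model, threshold=0.8, cloud_available=True):
    """Answer locally when confident; escalate only uncertain samples to the cloud."""
    label, confidence = edge_model(sample)
    if confidence >= threshold or not cloud_available:
        return Decision(label, confidence, "edge")  # fast path: the sample never leaves the device
    label, confidence = cloud_model(sample)          # slow path: upload just this one sample
    return Decision(label, confidence, "cloud")
```

Falling back to the edge answer when the cloud is unreachable keeps the system working offline, at the cost of accepting lower-confidence results.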

Five Game-Changing Benefits of Edge AI

1. Lightning-Fast Response Times

Real-time applications like autonomous vehicles require millisecond response times that are only achievable through local processing. When a self-driving car’s camera detects an obstacle, there’s no time for a round-trip to the cloud. Edge AI enables immediate decision-making that can literally save lives.
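A quick back-of-the-envelope calculation shows why latency matters here. The two delay values are hypothetical placeholders, not measurements; real cloud round-trip times vary widely with the network.

```python
def distance_during_delay(speed_kmh, delay_ms):
    """Metres a vehicle travels before a decision arrives."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_m_per_s * delay_ms / 1000   # ms -> s


# Hypothetical latencies for illustration only.
for label, delay in [("on-device inference", 20), ("cloud round-trip", 150)]:
    print(f"{label}: {distance_during_delay(100, delay):.2f} m at 100 km/h")
```

With these assumed numbers, the car covers about 0.56 m during on-device inference versus about 4.17 m while waiting for a cloud response.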

2. Bandwidth Liberation

Instead of streaming gigabytes of raw sensor data to the cloud, Edge AI processes information locally and transmits only actionable insights. A factory with hundreds of sensors might, for example, cut network traffic by 90% while gaining more useful intelligence about equipment performance and predictive maintenance needs.
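The savings are easy to estimate. This sketch assumes a hypothetical plant with 200 vibration sensors, each producing 1,000 two-byte samples per second, and an edge node that uploads a 64-byte feature summary per sensor per second instead of the raw stream.

```python
SECONDS_PER_DAY = 86_400


def daily_gb(sensors, bytes_per_second):
    """Upstream volume per day in gigabytes (1 GB = 10**9 bytes)."""
    return sensors * bytes_per_second * SECONDS_PER_DAY / 1e9


def traffic_reduction(raw_bps, summary_bps):
    """Fraction of upstream traffic saved by sending summaries instead of raw data."""
    return 1 - summary_bps / raw_bps


# Hypothetical plant: 200 sensors, 1,000 samples/s at 2 bytes each, vs. 64-byte summaries per second.
raw_bps, summary_bps = 1_000 * 2, 64
print(f"raw stream: {daily_gb(200, raw_bps):.1f} GB/day")
print(f"summaries:  {daily_gb(200, summary_bps):.1f} GB/day")
print(f"reduction:  {traffic_reduction(raw_bps, summary_bps):.1%}")
```

Under these assumptions raw streaming produces roughly 34.6 GB per day against about 1.1 GB for summaries, a reduction of about 97%. Your own ratio depends entirely on sampling rates and how compact the summaries are.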

3. Fort Knox Privacy

Processing data locally enhances privacy by keeping sensitive information on the device rather than transmitting it to external servers. Healthcare applications can analyze patient data for immediate diagnosis without compromising medical privacy. Smart home devices can provide personalized experiences without sending intimate details about daily routines to corporate servers.

4. Offline Independence

Edge AI systems continue operating even when internet connectivity is disrupted. Remote monitoring stations, offshore platforms, and mobile robots can maintain critical AI functionality without depending on network availability. This resilience is essential for mission-critical applications where downtime isn’t acceptable.
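Local decisions keep working offline, but results destined for the cloud need somewhere to wait. Below is a minimal store-and-forward sketch; it assumes a hypothetical `send` callable that uploads one item and raises `OSError` when the link is down.

```python
from collections import deque


class StoreAndForward:
    """Queue results locally while offline; flush them when the uplink returns."""

    def __init__(self, send, max_items=10_000):
        self.send = send                       # uploads one item; raises OSError on failure
        self.buffer = deque(maxlen=max_items)  # oldest items are dropped if the buffer fills

    def publish(self, item):
        self.buffer.append(item)
        self.flush()

    def flush(self):
        """Try to upload everything queued; return True once the buffer is empty."""
        while self.buffer:
            try:
                self.send(self.buffer[0])
            except OSError:
                return False                   # still offline; keep everything queued
            self.buffer.popleft()              # discard only after a successful send
        return True
```

Items are removed only after a successful upload, so nothing is lost during an outage unless the buffer overflows, in which case the bounded `deque` drops the oldest entries. A production version would also persist the queue to disk so it survives reboots.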

5. Cost-Effective Scale

Cloud AI costs grow roughly in proportion to usage, whereas Edge AI shifts most spending to upfront hardware, with smaller ongoing costs for power, maintenance, and updates. Organizations processing massive amounts of data often find Edge AI more economical at scale, especially once reduced bandwidth costs and lower cloud storage fees are factored in.
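Whether edge hardware pays off is a simple break-even calculation. All figures below are hypothetical, and `edge_monthly` deliberately includes the ongoing costs (power, maintenance, residual cloud use) that edge deployments still carry.

```python
import math


def break_even_months(hardware_cost, edge_monthly, cloud_monthly):
    """Months until edge hardware pays for itself compared with an all-cloud setup."""
    savings = cloud_monthly - edge_monthly
    if savings <= 0:
        return math.inf  # edge never pays off under these assumptions
    return hardware_cost / savings


# Hypothetical per-site figures: $4,000 of hardware and $50/month running costs at the edge,
# versus $400/month for cloud inference, storage, and data transfer.
print(f"{break_even_months(4_000, 50, 400):.1f} months")
```

With these assumed numbers the hardware pays for itself in about 11.4 months; if monthly edge costs approach cloud costs, it never does.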

Real-World Edge AI Case Studies

Smart Manufacturing: Bosch’s Predictive Revolution

Bosch has deployed Edge AI sensors across its production lines. These intelligent sensors continuously monitor machine vibrations, temperature, and acoustic signatures to predict equipment failures before they occur. By enabling predictive rather than reactive maintenance, the system reportedly reduced unplanned downtime by 25% and maintenance costs by 30%.

Retail Intelligence: Amazon Go’s Checkout-Free Experience

Amazon Go stores use hundreds of Edge AI cameras and sensors to track customer behavior and automatically charge for items taken from shelves. The system combines computer vision, sensor fusion, and deep learning to create a seamless shopping experience without traditional checkout processes. Each camera runs Edge AI models for real-time person tracking and item recognition, processing thousands of interactions simultaneously.

Healthcare Monitoring: Continuous Patient Intelligence

Modern wearable devices use Edge AI to continuously monitor vital signs and detect health anomalies in real time. These systems can identify irregular heart rhythms, help anticipate diabetic episodes, and alert medical professionals to emergencies, while the detection itself runs on the device rather than depending on constant cloud connectivity. Local processing protects patient privacy while still enabling life-saving interventions.

Agricultural Precision: John Deere’s Smart Farming

John Deere’s See & Spray technology uses Edge AI cameras mounted on agricultural equipment to identify individual weeds in crop fields. The system applies herbicide only to weeds, reducing chemical usage by up to 77% while improving crop yields. This precision agriculture approach requires real-time processing that’s only possible with Edge AI capabilities.

Autonomous Navigation: Tesla’s Edge Intelligence

Tesla vehicles process multiple camera feeds and other sensor data using onboard neural networks to power their driver-assistance and self-driving features (Tesla has moved most of its lineup to a camera-only approach without radar). The cars make driving decisions many times per second using these local networks, with cloud connectivity reserved for map updates and model improvements rather than real-time operation.

Essential Edge AI Hardware: Your Toolkit

Selecting the right hardware is crucial for Edge AI success. Different applications require different combinations of processing power, energy efficiency, and cost optimization.

NVIDIA Jetson Series: The Powerhouse Platform

The NVIDIA Jetson family is one of the most comprehensive Edge AI development platforms available today. The original Jetson Nano provides 472 GFLOPS of AI performance in a compact form factor, ideal for robotics, smart cameras, and IoT gateways, while the far more powerful Jetson AGX Orin delivers up to 275 TOPS for demanding applications like autonomous vehicles and industrial automation.

Key advantages include full Linux support, an extensive software ecosystem, and compatibility with popular AI frameworks including TensorFlow, PyTorch, and ONNX. The integrated GPU handles inference and can also run lighter training workloads, making Jetson a good fit for applications that need model fine-tuning at the edge.

Google Coral: Speed and Efficiency Champion

Google’s Coral Dev Board features a custom Edge TPU delivering 4 TOPS of performance at just 2 watts power consumption. This exceptional efficiency makes Coral ideal for battery-powered applications and high-density deployments where thermal management is critical.

The Coral platform excels at TensorFlow Lite inference, provided models are fully quantized to 8-bit integers and compiled with the Edge TPU Compiler, and it includes pre-optimized models for common computer vision tasks. The USB Accelerator variant integrates easily with existing systems like Raspberry Pi, adding AI acceleration without hardware redesign.

Raspberry Pi: The Versatile Foundation

While not AI-native, the Raspberry Pi ecosystem’s flexibility and affordability make it an excellent Edge AI platform when combined with AI accelerators. The Raspberry Pi 4 paired with a Google Coral USB Accelerator provides a cost-effective solution for Edge AI development and prototyping.

The Pi’s extensive GPIO capabilities, camera interfaces, and massive software ecosystem enable rapid prototyping and deployment of IoT applications with AI capabilities. Educational institutions and hobbyists particularly appreciate the low barrier to entry and extensive documentation.

Intel Neural Compute Stick 2: Plug-and-Play AI

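Intel’s Neural Compute Stick 2 offers a USB 3.0 solution for adding AI inference to any computer or single-board computer, accelerating computer vision models optimized with Intel’s OpenVINO toolkit. Note that Intel has discontinued the NCS2 and current OpenVINO releases no longer support its Myriad X VPU, so it is mainly relevant for existing deployments built on older OpenVINO versions.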

This approach allows existing systems to gain AI capabilities without hardware replacement, making it ideal for retrofitting legacy equipment with intelligent monitoring and analysis capabilities.

Software Frameworks: From Code to Device

The software ecosystem surrounding Edge AI has matured rapidly, offering developers multiple pathways from model development to deployment.

TensorFlow Lite: The Industry Standard

TensorFlow Lite (which Google has since rebranded as LiteRT) provides an optimized runtime for mobile and embedded devices, with aggressive optimization features including quantization and delegate-based hardware acceleration. The framework includes conversion tools that transform full TensorFlow models into efficient edge-ready formats.

Recent updates include improved GPU support, an expanded hardware delegate ecosystem, and enhanced model optimization tools. Google’s AI Edge Torch provides a direct path from PyTorch models to the TensorFlow Lite runtime, expanding framework compatibility.

PyTorch Mobile: Research to Production

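PyTorch Mobile offers a path from research environments to mobile devices while keeping the framework’s dynamic computation graph advantages during development. It particularly appeals to research teams and organizations prioritizing rapid iteration and experimentation. Note, however, that PyTorch Mobile is no longer actively developed; the PyTorch team now points to ExecuTorch as its successor for on-device inference, so new projects should evaluate ExecuTorch as well.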

Key advantages include simplified debugging, runtime model modification capabilities, and tight integration with PyTorch’s ecosystem of tools and libraries. However, models typically require more optimization work compared to TensorFlow Lite’s automated conversion pipeline.

Intel OpenVINO: Hardware-Optimized Performance

The OpenVINO 2025 releases are reported to deliver up to 50% lower inference latency on Intel CPUs than earlier versions, along with native quantization support for newer neural network architectures. The toolkit particularly excels at optimizing models for Intel hardware, including CPUs, integrated GPUs, and dedicated NPUs.

OpenVINO’s strength lies in its comprehensive optimization pipeline that analyzes models and automatically applies hardware-specific optimizations. The platform supports models from multiple frameworks and provides detailed performance profiling tools.

Edge Impulse: End-to-End TinyML Platform

Edge Impulse offers a complete development platform for TinyML applications, targeting ultra-low-power devices with kilobytes of memory. The platform includes data collection tools, automated feature engineering, model training, and deployment pipelines specifically designed for resource-constrained environments.

This approach democratizes Edge AI development by abstracting complex optimization techniques behind user-friendly interfaces, enabling domain experts to build AI applications without deep machine learning expertise.

Building Your First Edge AI Project

Let’s walk through building a practical Edge AI application: a smart security camera that can distinguish between people, vehicles, and animals without cloud connectivity.

Hardware Requirements

- Raspberry Pi 4 (4GB RAM recommended)

- Raspberry Pi Camera Module v2 or USB webcam

- Google Coral USB Accelerator for AI acceleration

- MicroSD card (32GB minimum)

- Power supply and case

Software Setup

Begin by installing Raspberry Pi OS and updating the system packages. Install the Coral Edge TPU runtime (libedgetpu) and the PyCoral Python library following Google’s official documentation, checking that the Python version on your OS image is supported. Then download pre-trained MobileNet SSD models compiled for the Edge TPU from the Coral model zoo.

Implementation Steps

1. Camera Interface Setup: Configure the camera module and test basic video capture functionality using Python’s OpenCV library.

2. Model Integration: Load the pre-trained object detection model and initialize the Edge TPU runtime for hardware acceleration.

3. Real-time Processing: Implement a video processing loop that captures frames, runs inference, and overlays detection results on the output video.

4. Alert Logic: Add conditional logic to trigger alerts when specific objects are detected, including timestamp logging and optional image capture.

5. Performance Optimization: Fine-tune inference parameters, frame rates, and resolution settings to balance accuracy with real-time performance requirements.
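Steps 3 and 4 can be sketched as a loop that runs detection on each frame and raises rate-limited alerts. To keep the example self-contained, `detect` is a hypothetical callable standing in for Edge TPU inference (for example, a wrapper around the PyCoral detection API) that returns `(label, score)` pairs; the label set, threshold, and 30-second cooldown are illustrative values.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(message)s")

ALERT_LABELS = {"person", "car", "truck", "dog", "cat"}  # illustrative classes of interest
SCORE_THRESHOLD = 0.6  # ignore low-confidence detections
COOLDOWN_S = 30.0      # at most one alert per label every 30 seconds


def run(frames, detect, on_alert, clock=time.monotonic):
    """Run detection on each frame and raise rate-limited alerts (steps 3 and 4)."""
    last_alert = {}  # label -> time of its most recent alert
    for frame in frames:
        for label, score in detect(frame):  # detect() stands in for Edge TPU inference
            if label not in ALERT_LABELS or score < SCORE_THRESHOLD:
                continue
            now = clock()
            if now - last_alert.get(label, float("-inf")) >= COOLDOWN_S:
                last_alert[label] = now
                logging.info("ALERT: %s detected (score %.2f)", label, score)  # timestamped log
                on_alert(label, score, frame)  # e.g. save a snapshot of the frame
```

The cooldown prevents one person lingering in view from triggering an alert on every frame. Passing the clock in as a parameter also makes the logic easy to test without waiting in real time.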

Testing and Validation

Test the system with various lighting conditions, object sizes, and movement patterns. Measure inference times, power consumption, and accuracy metrics to validate performance meets application requirements.

The Future of Edge Intelligence

Edge AI is rapidly evolving across multiple technological fronts, creating new possibilities for intelligent applications.

TinyML: AI on a Grain of Rice

The TinyML movement is pushing AI capabilities into microcontrollers with kilobytes of memory and milliwatts of power consumption. These ultra-low-power implementations enable always-on AI in battery-powered sensors, wearable devices, and IoT endpoints where traditional edge computing hardware would be impractical.

Applications include voice wake-word detection, gesture recognition, and predictive maintenance sensors that operate for years on battery power. The constraint-driven optimization required for TinyML is advancing neural network efficiency techniques that benefit the entire Edge AI ecosystem.
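Memory is usually the binding constraint in TinyML, and quantization is what makes many models fit. This sketch estimates weight storage for a hypothetical 250,000-parameter keyword-spotting model; it ignores activation memory, which typically lives in RAM separately from the weights stored in flash.

```python
def weights_kb(num_params, bytes_per_param):
    """Approximate storage for a model's weights, in kilobytes (1 KB = 1024 bytes)."""
    return num_params * bytes_per_param / 1024


params = 250_000  # hypothetical keyword-spotting model
for dtype, width in [("float32", 4), ("int8", 1)]:
    print(f"{dtype}: {weights_kb(params, width):.0f} KB")
```

The float32 version needs about 977 KB for weights alone, nearly filling a hypothetical microcontroller with 1 MB of flash, while the int8 version needs about 244 KB and leaves room for application code.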

Federated Learning: Training Without Data Sharing

Federated learning represents a paradigm shift in how AI models are trained, allowing devices to collaboratively improve models without sharing raw data. Edge devices train on local data and share only model updates, preserving privacy while enabling collective intelligence.

This approach is particularly valuable in healthcare, finance, and other privacy-sensitive domains where data sharing is restricted but collective learning provides significant benefits. As federated learning techniques mature, Edge AI devices will become self-improving systems that adapt to local conditions while benefiting from global knowledge.
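The aggregation step at the heart of Federated Averaging (FedAvg), a widely used federated learning algorithm, is short enough to show directly: the server averages the weight vectors sent by clients, weighting each client by its number of local training examples. This plain-Python sketch covers only that step; local training, communication, and protections such as secure aggregation are omitted, and weights are represented as flat lists for simplicity.

```python
def fed_avg(client_updates):
    """Combine client model weights, weighted by local dataset size.

    client_updates: list of (weights, num_examples), where weights is a list of floats.
    Only these weights leave the devices; the raw training data never does.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [sum(w[i] * n for w, n in client_updates) / total for i in range(dim)]


# Two hypothetical clients: one trained on 1 example, the other on 3.
print(fed_avg([([1.0, 2.0], 1), ([3.0, 4.0], 3)]))  # [2.5, 3.5]
```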

5G and Edge Synergy: Distributed Intelligence Networks

The deployment of 5G networks enables new architectural possibilities for Edge AI systems. Ultra-low latency 5G connections allow real-time coordination between edge devices, creating distributed intelligence networks that exceed the capabilities of individual devices.

Applications include cooperative autonomous vehicle systems, smart city coordination, and industrial automation networks where devices share real-time insights to optimize collective performance. This evolution transforms Edge AI from individual device intelligence to networked artificial intelligence.

Practical Implementation Guide

Successfully deploying Edge AI requires careful consideration of technical, operational, and business factors.

Model Selection and Optimization

Choose models appropriate for your hardware constraints and accuracy requirements. Start with pre-trained models from established repositories before considering custom model development. Apply optimization techniques including quantization, pruning, and knowledge distillation to reduce model size and improve inference speed.
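Magnitude pruning is the simplest form of pruning: remove the weights with the smallest absolute values. This plain-Python sketch operates on a flat list; real toolkits prune per layer, usually fine-tune afterwards to recover accuracy, and only save memory or time if the storage format or runtime exploits the resulting zeros.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of weights (unstructured pruning)."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])  # indices of the k smallest |w|
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


print(magnitude_prune([0.1, -0.9, 0.05, 0.7], sparsity=0.5))
```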

Performance Benchmarking

Establish baseline performance metrics including inference latency, power consumption, accuracy, and thermal characteristics. Test across expected operating conditions including temperature variations, lighting changes, and input data diversity.
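Latency is the easiest of these metrics to capture in software. This sketch times repeated calls to a hypothetical `infer` callable and reports the mean, median, 95th percentile, and maximum; for real-time systems the tail values often matter more than the average. Power and thermal behavior need external instruments, such as a USB power meter, and are not covered here.

```python
import math
import statistics
import time


def benchmark(infer, sample, warmup=10, runs=100):
    """Time repeated calls to `infer(sample)` and summarise latency in milliseconds."""
    for _ in range(warmup):  # discard early runs affected by lazy initialisation and caches
        infer(sample)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    timings.sort()
    return {
        "mean_ms": statistics.fmean(timings),
        "p50_ms": statistics.median(timings),
        "p95_ms": timings[math.ceil(0.95 * len(timings)) - 1],  # nearest-rank percentile
        "max_ms": timings[-1],
    }


if __name__ == "__main__":
    # Stand-in workload; replace with a call into your model's interpreter.
    result = benchmark(lambda x: sum(i * i for i in range(10_000)), None)
    for name, value in result.items():
        print(f"{name}: {value:.3f}")
```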

Deployment Strategy

Plan for model updates, configuration management, and remote monitoring capabilities. Edge devices often operate in challenging environments where physical access is limited, making robust remote management essential.

Security Considerations

Implement security measures including model encryption, secure boot processes, and intrusion detection. Edge devices are often deployed in accessible locations, making them vulnerable to physical attacks that could compromise AI models or sensitive data.
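One concrete safeguard is refusing to load a model whose integrity cannot be verified. This sketch checks an HMAC-SHA256 tag using only Python's standard library; it provides integrity and authenticity, not confidentiality (encryption is a separate step), and it assumes the key is stored securely on the device, for example in a secure element.

```python
import hashlib
import hmac


def model_is_trusted(model_bytes, expected_tag, key):
    """Return True only if the HMAC-SHA256 tag over the model file matches."""
    tag = hmac.new(key, model_bytes, hashlib.sha256).hexdigest()
    return hmac.compare_digest(tag, expected_tag)  # constant-time comparison
```

For large fleets, asymmetric signatures are often preferable, because devices then only need a public key and a compromised device cannot forge valid models.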

Tools & Resources Deep Dive

Development Platforms Comparison

NVIDIA Jetson Ecosystem: Offers the most comprehensive development experience with extensive documentation, sample projects, and community support. The JetPack SDK provides optimized libraries for computer vision, speech processing, and robotics applications. Best suited for applications requiring high performance and full Linux compatibility.

Google Coral Platform: Provides streamlined development focused on TensorFlow Lite models with exceptional power efficiency. The platform includes cloud-based training tools and automated model optimization pipelines. Ideal for battery-powered applications and high-density deployments.

Raspberry Pi + Accelerators: Delivers maximum flexibility and community support with the largest ecosystem of tutorials, projects, and accessories. This approach allows mixing and matching accelerators based on specific requirements while maintaining cost-effectiveness.

Framework Integration Workflows

Most successful Edge AI projects use hybrid approaches combining multiple frameworks. Train models in full-featured environments like TensorFlow or PyTorch, convert to optimized formats using framework-specific tools, and deploy using runtime engines optimized for target hardware.

Consider model versioning, A/B testing capabilities, and rollback procedures when designing deployment workflows. Edge devices often operate in mission-critical applications where model updates must be thoroughly validated before deployment.
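Staged rollouts make model updates safer: ship the new version to a small, stable subset of devices, compare its metrics against the current model, and roll back if it regresses. The helpers below are a hypothetical sketch; the hashing scheme, the percentage-based rollout, and the 2-percentage-point error tolerance are illustrative choices.

```python
import hashlib


def in_rollout(device_id, model_version, percent):
    """Deterministically place a device in the first `percent` (0-100) of a staged rollout."""
    digest = hashlib.sha256(f"{model_version}:{device_id}".encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket 0-99 per device and version
    return bucket < percent


def should_roll_back(baseline_error_rate, canary_error_rate, tolerance=0.02):
    """Roll back if the canary's error rate exceeds the baseline by more than `tolerance`."""
    return canary_error_rate - baseline_error_rate > tolerance


# Example: canary at 10% of the fleet, then decide based on observed error rates.
if in_rollout("camera-0042", "detector-v2", percent=10):
    print("device receives detector-v2")
print("roll back?", should_roll_back(0.05, 0.08))
```

Hashing the device ID together with the model version keeps each device's assignment stable within a rollout while reshuffling the canary group for the next version.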

Career Opportunities in Edge AI

The Edge AI revolution is creating unprecedented career opportunities across multiple disciplines and industries.

Technical Roles

Edge AI Engineers design and implement AI systems for resource-constrained environments, combining expertise in machine learning, embedded systems, and optimization techniques. Average salaries range from $134,188 to $164,499 depending on location and experience.

AI Hardware Engineers develop specialized processors, accelerators, and systems optimized for edge AI workloads. This role requires deep understanding of computer architecture, AI algorithms, and power-efficient design principles.

Edge MLOps Engineers build deployment pipelines, monitoring systems, and management tools for Edge AI applications. This emerging role bridges traditional DevOps practices with the unique challenges of distributed AI systems.

Industry Applications

Manufacturing industries are hiring Edge AI specialists to implement predictive maintenance, quality control, and process optimization systems. Healthcare organizations need experts to deploy AI-powered medical devices and patient monitoring systems while maintaining privacy compliance.

Automotive companies are building teams focused on autonomous vehicle systems, advanced driver assistance, and in-vehicle AI experiences. These roles often command premium salaries due to the safety-critical nature of automotive applications.

Skill Development Pathway

Begin with foundational knowledge in machine learning, computer vision, or natural language processing depending on your target applications. Develop embedded programming skills in C/C++ and Python, with emphasis on resource optimization techniques.

Gain hands-on experience with Edge AI hardware platforms and frameworks through personal projects and online courses. Build a portfolio demonstrating successful deployment of AI applications to resource-constrained devices.

Comprehensive FAQ Section

Edge AI represents more than a technological shift: it is a fundamental reimagining of how intelligence interfaces with the physical world. With Gartner predicting that 75% of enterprise-generated data would be created and processed outside centralized data centers or the cloud by 2025, organizations that master Edge AI today will lead tomorrow’s intelligent economy.

The convergence of specialized hardware, optimized software frameworks, and mature development tools has created an unprecedented opportunity for developers and organizations to deploy sophisticated AI capabilities directly where data is created. From manufacturing floors detecting equipment anomalies in real-time to wearable devices monitoring health continuously, Edge AI is enabling applications that were impossible just years ago.

Your journey into Edge AI starts with understanding the fundamental trade-offs between local and cloud processing, selecting appropriate hardware for your application requirements, and mastering the optimization techniques that make sophisticated AI models run efficiently on constrained devices. The tools, frameworks, and development platforms discussed in this guide provide a solid foundation for building production-ready Edge AI applications.

The future belongs to systems that combine the best of both worlds: the computational power of the cloud for training and complex analytics, with the speed, privacy, and reliability of edge processing for real-time intelligence. As you embark on this journey, remember that Edge AI isn’t about replacing human intelligence—it’s about augmenting human capabilities and enabling previously impossible applications.

Start small, experiment frequently, and build progressively more complex systems as your expertise grows. The Edge AI revolution is just beginning, and the opportunities for innovation, career advancement, and positive impact are limitless for those who embrace this transformative technology today.
