Introduction

This review evaluates “Deal with Mislabeled and Imbalanced Machine Learning Datasets – AI-Powered Course”

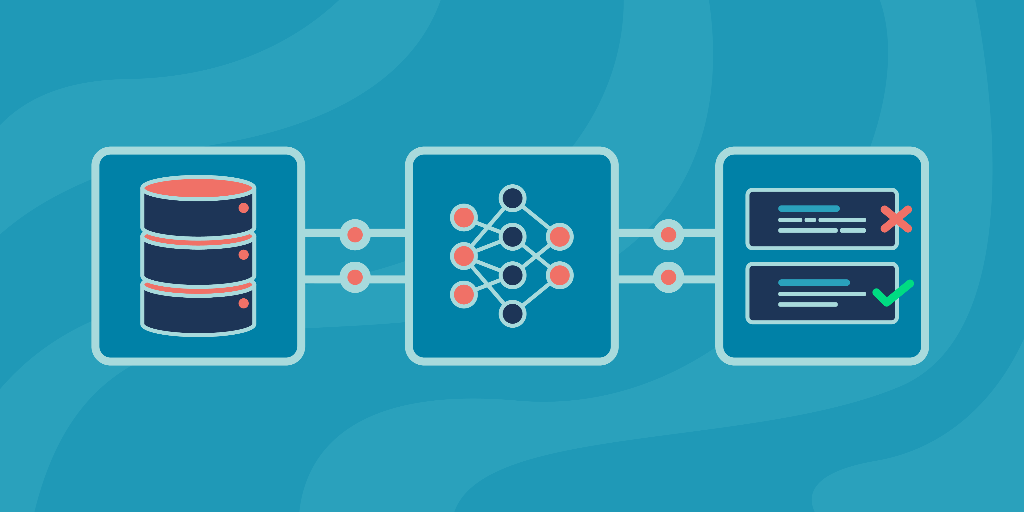

(listed under the provider name “AI Solutions for Mislabeled ML Datasets”), a focused educational product aimed at

helping practitioners identify, measure, and mitigate label noise and class imbalance in real-world machine learning

pipelines. The description promises practical guidance on analyzing effects, measuring and recovering from noise, and

interpreting results to avoid bias. Below you’ll find an objective, detailed assessment to help you decide whether this

course fits your needs.

Product Overview

Manufacturer / Provider: AI Solutions for Mislabeled ML Datasets (course publisher)

Product category: Online technical course / educational resource

Intended use: Teach ML practitioners and researchers how to detect and handle mislabeled examples and class imbalance, use robust evaluation metrics, apply mitigation techniques (noise filtering, reweighting, sampling methods), and interpret outcomes to reduce bias.

Appearance, Materials, and Aesthetic

Because this is a digital course, “appearance” refers to the course interface, instructional design, and learning materials.

The course presents a clean, professional aesthetic typical of modern technical courses: a neutral color palette, readable typography, and clear slide layouts. Materials include a mix of recorded video lectures, slide decks, downloadable Jupyter notebooks, sample datasets, and short cheat-sheets summarizing key methods.

Unique design features worth noting:

- Concise, modular lessons: material is broken into focused micro-lessons (10–20 minutes each) to lower cognitive load.

- Hands-on labs: downloadable notebooks that can be run locally or in a cloud notebook environment.

- Visual diagnostics: emphasis on easy-to-interpret plots (confusion matrices, calibration plots, noise vs. accuracy curves) to aid intuition.

- Practical checklists: short checklists for dataset auditing and bias checks to use before model training or deployment.
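The noise vs. accuracy curves mentioned in the list above can be reproduced in a few lines. The following is a minimal sketch, not the course's own notebook code. It assumes a synthetic dataset from scikit-learn's `make_classification`, symmetric (uniformly random) label flips applied to the training set only, and an unpruned decision tree, chosen here because it tends to memorize noisy labels. The helper name `flip_labels` is hypothetical.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


def flip_labels(y, noise_rate, rng):
    """Return a copy of binary (0/1) labels with a fraction `noise_rate` flipped."""
    y_noisy = y.copy()
    n_flip = int(round(noise_rate * len(y)))
    flip_idx = rng.choice(len(y), size=n_flip, replace=False)
    y_noisy[flip_idx] = 1 - y_noisy[flip_idx]
    return y_noisy


# Synthetic, clean binary dataset (assumption: stands in for a real one).
X, y = make_classification(
    n_samples=2000, n_features=10, n_informative=5, n_redundant=0,
    n_clusters_per_class=1, class_sep=2.0, flip_y=0.0, random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

rng = np.random.default_rng(0)
noise_rates = [0.0, 0.1, 0.2, 0.3, 0.4]
curve = {}
for rate in noise_rates:
    y_train_noisy = flip_labels(y_train, rate, rng)
    model = DecisionTreeClassifier(random_state=0).fit(X_train, y_train_noisy)
    # Score against the clean test labels so the curve reflects true accuracy.
    curve[rate] = model.score(X_test, y_test)

for rate, acc in curve.items():
    print(f"noise={rate:.1f}  clean-test accuracy={acc:.3f}")
```

Plotting `curve` (noise rate on the x-axis, accuracy on the y-axis) gives the kind of diagnostic the course describes. Swapping in a different model shows how sensitive each one is to the same noise.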

Key Features & Specifications

- Core topics: measuring label noise, noise models, noise-robust loss functions, data cleaning and relabeling strategies, sample reweighting, class imbalance techniques (resampling, SMOTE and variants, cost-sensitive learning), evaluation metrics robust to imbalance (precision-recall, balanced accuracy, AUROC / AUPR trade-offs), and model calibration.

- Hands-on content: Jupyter notebooks with reproducible examples and sample datasets illustrating noisy and imbalanced scenarios.

- Tools & stack: practical examples using Python, scikit-learn, imbalanced-learn, and common libraries for plotting and evaluation (matplotlib/seaborn).

- Interpretability & bias: sections on interpreting repaired models, healthy skepticism about automated relabeling, and guidelines to avoid introducing bias during remediation.

- Target audience & prerequisites: intended for ML practitioners, data scientists, and researchers with intermediate experience in Python and basic ML (classification).

- Format & duration: self-paced online course with a mix of videos, notebooks, and quizzes. Estimated time investment: several hours to a few days depending on how deeply you engage with exercises.

- Deliverables: downloadable code, sample notebooks, diagnostic templates, and a short reference sheet summarizing recommended workflows.
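To illustrate why the course favors imbalance-robust metrics over plain accuracy, here is a small dependency-free sketch. The 95/5 class split and the always-predict-majority "model" are assumptions made up for the example. In practice, scikit-learn's `balanced_accuracy_score` computes the same quantity.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall, so every class counts equally."""
    recalls = []
    for cls in sorted(set(y_true)):
        idx = [i for i, t in enumerate(y_true) if t == cls]
        recalls.append(sum(y_pred[i] == cls for i in idx) / len(idx))
    return sum(recalls) / len(recalls)


# Hypothetical imbalanced problem: 95 negatives, 5 positives.
y_true = [0] * 95 + [1] * 5
# A useless "model" that always predicts the majority class.
y_pred = [0] * 100

acc = accuracy(y_true, y_pred)
bal_acc = balanced_accuracy(y_true, y_pred)
print(f"accuracy={acc:.2f}  balanced accuracy={bal_acc:.2f}")
```

The majority-class predictor looks strong on accuracy yet finds none of the positives. Balanced accuracy exposes this because it averages recall across classes.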

Experience Using the Course (Scenarios & Practicality)

Scenario 1: Small research dataset with label noise

The course shines when applied to small- to medium-sized research datasets where label noise is suspected. The diagnostic workflow (visualize label consistency, train noise-aware models, and apply relabeling heuristics) is clear and reproducible. Notebooks facilitate rapid experimentation: you can test a probabilistic relabeling approach, compare noise-robust losses, and see the concrete impact on cross-validated metrics. The course emphasizes caution (never blindly accept automated relabeling) and provides strategies, such as human-in-the-loop relabeling of high-uncertainty examples, that are practical in academic settings.
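One common way to build a human review queue like the one described above is to rank examples by how little an out-of-fold model agrees with their current label. This is a hedged sketch of that general idea, not necessarily the method the course teaches. The synthetic data, the 10% injected noise, the logistic regression model, and the names `self_confidence` and `review_budget` are all assumptions for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict

X, y_clean = make_classification(
    n_samples=500, n_features=10, n_informative=5, n_redundant=0,
    n_clusters_per_class=1, class_sep=2.0, flip_y=0.0, random_state=0,
)

# Simulate annotation errors by flipping 10% of the labels.
# (We only know which ones are wrong because we injected them.)
rng = np.random.default_rng(0)
noise_rate = 0.10
n_flip = int(round(noise_rate * len(y_clean)))
flipped = rng.choice(len(y_clean), size=n_flip, replace=False)
y_noisy = y_clean.copy()
y_noisy[flipped] = 1 - y_noisy[flipped]

# Out-of-fold probabilities: each example is scored by a model
# that was not trained on that example's (possibly wrong) label.
proba = cross_val_predict(
    LogisticRegression(max_iter=1000), X, y_noisy, cv=5, method="predict_proba"
)

# Self-confidence: predicted probability of the label the example currently has.
# Labels are 0/1 here, so they can index the probability columns directly.
self_confidence = proba[np.arange(len(y_noisy)), y_noisy]

# Send the least-confident examples to a human annotator; do not auto-relabel.
review_budget = 50
review_queue = np.argsort(self_confidence)[:review_budget]

hit_rate = np.isin(review_queue, flipped).mean()
print(f"share of injected errors in review queue: {hit_rate:.2f}")
```

The queue only prioritizes examples for inspection. Consistent with the course's caution, the final relabeling decision stays with a person.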

Scenario 2: Production classification pipeline with severe imbalance

For production systems (fraud detection, rare disease diagnosis) the course provides useful strategies: class-weighting in loss functions, targeted resampling, and careful use of synthetic oversampling (SMOTE) variants. The modules on evaluation metrics are particularly valuable: they stress precision-recall curves and cost-aware metrics rather than accuracy, and show how calibration can change downstream decisions. However, while the course gives good conceptual guidance for productionization, it provides limited step-by-step instructions for integration with existing model training pipelines or distributed data engineering platforms.
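The class-weighting strategy and the precision-recall focus described above can be combined in a short experiment. This sketch assumes a synthetic dataset with roughly 3% positives and uses scikit-learn's `class_weight="balanced"` option on logistic regression. It is an illustration of the technique under those assumptions, not the course's own code.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import average_precision_score, recall_score
from sklearn.model_selection import train_test_split

# Synthetic rare-event problem (assumption: about 3% positive class).
X, y = make_classification(
    n_samples=5000, n_features=10, n_informative=5, n_redundant=0,
    weights=[0.97, 0.03], flip_y=0.0, random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

results = {}
for name, class_weight in [("unweighted", None), ("balanced", "balanced")]:
    model = LogisticRegression(class_weight=class_weight, max_iter=1000)
    model.fit(X_train, y_train)
    scores = model.predict_proba(X_test)[:, 1]
    results[name] = {
        "accuracy": model.score(X_test, y_test),
        # Recall on the rare class at the default 0.5 threshold.
        "recall": recall_score(y_test, model.predict(X_test)),
        # Area under the precision-recall curve (threshold-free).
        "average_precision": average_precision_score(y_test, scores),
    }

for name, metrics in results.items():
    print(name, {k: round(v, 3) for k, v in metrics.items()})
```

Weighting typically trades some accuracy and precision for higher recall on the rare class. Note that reweighting also distorts the predicted probabilities, which is one reason calibration matters for downstream decisions.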

Scenario 3: Large-scale datasets and automated pipelines

When scaling to very large datasets, the course’s notebooks may require adaptation: the examples run comfortably on local machines, but practitioners will need to refactor code for distributed processing (Spark, Dask) or to use sampling strategies to test approaches. The course does cover algorithmic trade-offs, but it stops short of detailed engineering patterns for CI/CD, automated monitoring, or incrementally handling label drift in production.
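For the sampling route mentioned above, a stratified subsample keeps the rare class represented when you prototype on a laptop. The following is a minimal NumPy sketch. The function name `stratified_sample_indices` and the 1% positive rate are hypothetical, and a real pipeline on Spark or Dask would need its own equivalent.

```python
import numpy as np


def stratified_sample_indices(y, fraction, seed=0):
    """Pick `fraction` of the indices from each class, keeping at least one per class."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y)
    picked = []
    for cls in np.unique(y):
        idx = np.flatnonzero(y == cls)
        n_keep = max(1, int(round(fraction * idx.size)))
        picked.append(rng.choice(idx, size=n_keep, replace=False))
    return np.sort(np.concatenate(picked))


# Hypothetical label vector: 9,900 negatives and 100 positives.
y = np.array([0] * 9900 + [1] * 100)
sample_idx = stratified_sample_indices(y, fraction=0.1)

print("sample size:", sample_idx.size)
print("positives in sample:", int(y[sample_idx].sum()))
```

A plain random sample of the same size could easily contain far fewer positives, which makes results on imbalanced data unstable from run to run.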

Learning experience & pedagogy

The pedagogical approach balances theory and practice. Short conceptual segments explain why particular techniques work (or fail), followed by hands-on examples that reproduce the effect on synthetic and real datasets. Quizzes and exercises reinforce key ideas, and cheat-sheets help retain workflows. The course encourages critical thinking about fairness and bias, which is a strong positive.

Pros

- Focused and practical: specifically addresses common but under-covered real-world problems (label noise and imbalance).

- Actionable materials: reproducible notebooks and diagnostic templates speed up real experimentation.

- Balanced coverage: both measurement/diagnostics and remediation techniques are addressed, including evaluation and interpretability.

- Bias-aware: guidance on avoiding introduction of new biases during relabeling and resampling is integrated into workflows.

- Good for intermediate practitioners: assumes basic ML knowledge and builds to practical solutions quickly.

Cons

- Limited engineering depth: not a full guide to productionizing solutions at scale (e.g., orchestration, distributed training, monitoring).

- Assumes familiarity with Python ML tooling; absolute beginners may find the pace brisk.

- Some methods require human-in-the-loop review or domain expertise to execute safely; the course explains this but cannot remove the practical overhead.

- Exact duration and the level of hands-on support (mentor access, community) are not clearly stated; learners wanting guided feedback may need to seek additional resources.

Conclusion

Overall impression: This course is a well-targeted, practical resource for data scientists and ML practitioners who face real-world datasets that are mislabeled and imbalanced. It balances theory and practice with useful code examples, diagnostic visualizations, and bias-aware remediation strategies. If your work frequently encounters noisy labels or rare classes, this course will likely accelerate your ability to detect issues and apply sensible fixes.

Who should buy: intermediate ML engineers, applied researchers, and data scientists who want concrete tools and workflows for noisy/imbalanced data.

Who should look elsewhere or supplement: absolute beginners in machine learning, or teams that need a full production engineering playbook for distributed model pipelines (in which case this course is a valuable complement but not a complete solution).

Final recommendation: Recommended as a focused, practical course on a niche but critical topic. Expect to pair it with organizational practices (human validation, monitoring) and engineering work to fully deploy the techniques in production environments.
