Dataset Splits in Machine Learning: A Guide to Train, Validation & Test Sets

Imagine you’re training a machine learning model to predict house prices. You feed it a dataset of 10,000 houses with all their features and sale prices. After training, you test the model on the *same 10,000 houses*, and its predictions match the sale prices almost perfectly. A success? Not at all. You’ve just trained a model that is brilliant at “memorizing” but has likely learned nothing about how to price a new, unseen house.

This is the single most common pitfall for beginners in machine learning. A model’s true performance is not measured by how well it does on data it has already seen, but on its ability to generalize to new, real-world data. To build trustworthy AI, we need a disciplined way to evaluate our models honestly. That discipline is called **dataset splitting**.

This guide will break down one of the most fundamental concepts in all of machine learning: the proper way to split your data into Training, Validation, and Test sets. Understanding the distinct role of each set is absolutely critical for preventing data leakage, tuning your model effectively, and building AI systems that actually work in the real world.

The Golden Rule & The Student Analogy

The entire practice of dataset splitting is built on one golden rule: Never evaluate your model on the same data it was trained on. A model’s score on data it has already “memorized” is optimistically biased, so it tells you little about how the model will perform on new data.
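A quick way to see the golden rule in action is to score one model twice. The first score uses the data it was trained on, and the second uses data it never saw. The sketch below assumes scikit-learn. It uses synthetic regression data from `make_regression` as a stand-in for the house-price table. The unpruned `DecisionTreeRegressor` is an illustrative choice, picked because it can memorize its training set.

```python
from sklearn.datasets import make_regression
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic, noisy regression data standing in for a house-price table.
X, y = make_regression(n_samples=1000, n_features=10, noise=25.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# An unpruned decision tree keeps splitting until each training example
# sits in its own leaf, i.e. it can memorize the training set.
model = DecisionTreeRegressor(random_state=0)
model.fit(X_train, y_train)

train_r2 = model.score(X_train, y_train)  # R^2 on data the model has already seen
test_r2 = model.score(X_test, y_test)     # R^2 on held-out data

print(f"R^2 on training data: {train_r2:.3f}")
print(f"R^2 on held-out data: {test_r2:.3f}")
```

Expect a near-perfect R² on the training rows and a noticeably lower one on the held-out rows. Only the second number says anything about new houses.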

The Exam Prep Analogy: Think of building a model like a student preparing for a final exam.

– The Training Set is the textbook and homework problems. The student can study this material as much as they want to learn the concepts.

– The Validation Set is the collection of official practice exams. The student uses these to check their understanding, see which study techniques work best, and decide when they are ready.

– The Test Set is the final, proctored exam, with questions the student has never seen before. This is the true, unbiased measure of their knowledge.

If the student were to steal the final exam ahead of time and memorize the answers, their perfect score would be meaningless. In the same way, if our model “sees” the test data during training, its performance score is a lie. This is why a disciplined separation is crucial.

The Three Essential Datasets Explained

📚 The Training Set (The Textbook)

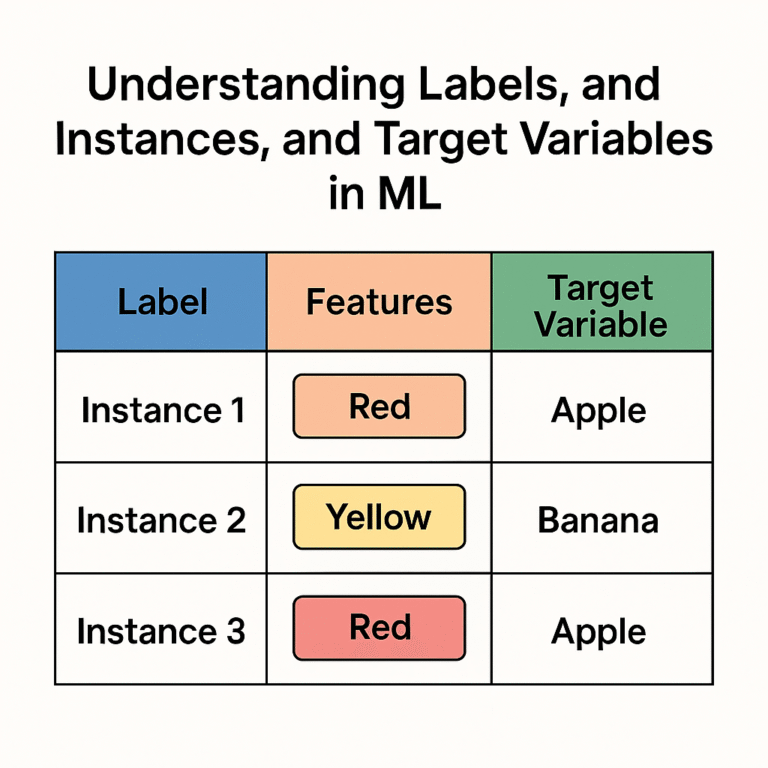

This is the bulk of your data, typically 60% to 80% of the entire dataset. The model uses this data exclusively during training to learn the patterns and relationships between the input features and the target variable. It adjusts its internal parameters (for example, the weights and biases of a neural network) to minimize its error on this set.
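For models trained by gradient descent, iteratively adjusting weights and biases looks roughly like the loop below. This is a minimal NumPy sketch that fits a one-feature linear model `y ≈ w * x + b` to a toy training set. The data, learning rate and number of steps are arbitrary assumptions chosen for illustration, and real libraries add many refinements on top of this basic idea.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy training set: one feature, target = 3x + 2 plus a little noise.
X_train = rng.uniform(-1, 1, size=200)
y_train = 3.0 * X_train + 2.0 + rng.normal(scale=0.1, size=200)

w, b = 0.0, 0.0        # the model's parameters: one weight and one bias
learning_rate = 0.1    # arbitrary step size for this toy problem

for _ in range(500):
    error = (w * X_train + b) - y_train
    # Gradients of the mean squared error with respect to w and b.
    grad_w = 2.0 * np.mean(error * X_train)
    grad_b = 2.0 * np.mean(error)
    # Step against the gradient to reduce the error on the training set.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

train_mse = np.mean((w * X_train + b - y_train) ** 2)
print(f"w = {w:.2f}, b = {b:.2f}, training MSE = {train_mse:.4f}")
```

Only the training set appears anywhere in this loop, so the validation and test data play no part in fitting the parameters.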

📝 The Validation Set (The Practice Exam)

This dataset, typically 10% to 20% of the total, serves a critical purpose: hyperparameter tuning and model selection. After you’ve trained several different models (or one model with different settings), you use the validation set to see which one performs best on data it hasn’t been trained on. It helps you answer questions like:

- Which algorithm should I use (e.g., Random Forest vs. SVM)?

- How many layers should my neural network have?

- What is the optimal learning rate?

You use the performance on the validation set to make these decisions. Because you are using it to tune your model, it is no longer truly “unseen.” This is why a third set is required.
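Model selection on the validation set can be sketched as a simple loop. Fit every candidate on the training set, score it on the validation set, and keep the best. This assumes scikit-learn. The candidate list (a Random Forest and an SVM, at two settings each) and the synthetic data from `make_classification` are hypothetical choices for illustration. The test split is created but deliberately left unused.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

X, y = make_classification(n_samples=1000, n_features=20, random_state=0)

# 70% train, then split the remaining 30% evenly into validation and test.
X_train, X_temp, y_train, y_temp = train_test_split(X, y, test_size=0.3, random_state=42)
X_val, X_test, y_val, y_test = train_test_split(X_temp, y_temp, test_size=0.5, random_state=42)

# Hypothetical candidates: two algorithms, each with two settings.
candidates = {
    "RandomForest(max_depth=3)": RandomForestClassifier(max_depth=3, random_state=0),
    "RandomForest(max_depth=None)": RandomForestClassifier(max_depth=None, random_state=0),
    "SVC(C=0.1)": SVC(C=0.1),
    "SVC(C=10)": SVC(C=10.0),
}

val_scores = {}
for name, model in candidates.items():
    model.fit(X_train, y_train)                   # learn from the training set only
    val_scores[name] = model.score(X_val, y_val)  # judge on the validation set

best_name = max(val_scores, key=val_scores.get)
print(val_scores)
print("Selected:", best_name)
# X_test / y_test are intentionally not touched during selection.
```

scikit-learn's `GridSearchCV` automates this kind of search. By default, though, it scores candidates with cross-validation rather than with a single fixed validation set.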

🏆 The Test Set (The Final Exam)

This is the final, held-out portion of your data, also typically 10% to 20%. The test set is sacred. It should be touched only **once**, at the very end of your project, after you have selected and tuned your final model using the training and validation sets.

The performance score on the test set is the true, unbiased estimate of your model’s ability to generalize to new data. This is the number you would report to stakeholders to describe how well your model is expected to perform in the real world.

The “How”: Splitting Data with Python & Scikit-Learn

In practice, libraries like Scikit-learn make this process easy. Its `train_test_split` function is the standard tool for creating these splits. Because it divides data into two parts, a three-way split is usually made by calling it twice.

Practical Code Example

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split

# X contains your features and y your labels; make_classification
# generates placeholder data here so the example runs on its own.
X, y = make_classification(n_samples=1000, random_state=0)

# First, split into a training set and a temporary set (which will become validation + test)
X_train, X_temp, y_train, y_temp = train_test_split(
    X, y, test_size=0.3, random_state=42
)

# Now, split the temporary set into validation and test sets:
# 0.5 of the 30% temporary set gives 15% validation and 15% test
X_val, X_test, y_val, y_test = train_test_split(
    X_temp, y_temp, test_size=0.5, random_state=42
)

print(f"Training set shape: {X_train.shape}")
print(f"Validation set shape: {X_val.shape}")
print(f"Test set shape: {X_test.shape}")
```

A Critical Nuance: Stratified Splitting

For classification problems, especially with imbalanced classes (e.g., a fraud dataset with 99% non-fraud and 1% fraud), a random split might leave one of your sets with very few or zero examples of the minority class. To prevent this, you should use a stratified split. This ensures that each class appears in approximately the same proportion in the train, validation and test sets.

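In Scikit-learn, you can do this by passing `stratify=y` to `train_test_split`. In the two-step split above, stratify the first call on `y` and the second call on `y_temp`, because the second call splits only the temporary set.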
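Here is a sketch of the stratified version of the two-step split. It assumes scikit-learn and uses a made-up label array with 1% positives and random placeholder features. The second call stratifies on `y_temp`, because that is the array being split at that point.

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Made-up imbalanced labels: 990 negatives and 10 positives (1% minority class).
y = np.array([0] * 990 + [1] * 10)
X = rng.normal(size=(len(y), 5))  # placeholder features

# Stratify the first split on y ...
X_train, X_temp, y_train, y_temp = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=42
)
# ... and the second split on y_temp, since that is what is being split.
X_val, X_test, y_val, y_test = train_test_split(
    X_temp, y_temp, test_size=0.5, stratify=y_temp, random_state=42
)

for name, labels in [("train", y_train), ("val", y_val), ("test", y_test)]:
    print(f"{name}: {len(labels)} rows, {labels.sum()} positives ({labels.mean():.1%})")
```

The training set keeps exactly the 1% positive rate, and the validation and test sets each receive at least one of the three remaining positives.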

Advanced Validation: K-Fold Cross-Validation

What if your single validation set, chosen randomly, happens to be unusually easy or hard? Your evaluation of the model would then be misleading. To get a more robust estimate, we can use K-Fold Cross-Validation.

The Analogy: Instead of one practice exam, the student takes 5 different practice exams (K=5). Their final “practice score” is the average of their performance across all five. This gives a much more reliable estimate of their true knowledge and reduces the chance of being misled by one lucky (or unlucky) practice test.

In K-Fold Cross-Validation, the data available for training and validation is split into K folds of roughly equal size. The test set stays held out throughout. The model is then trained K times. In each iteration, one fold serves as the validation set and the remaining K-1 folds are used for training, so every example is used for validation exactly once. The final performance metric is the average of the K validation scores.
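The K-Fold procedure can be sketched in scikit-learn with `KFold`. The sketch writes it out first as an explicit loop, then uses the `cross_val_score` shortcut, which runs the same folds in one call. The synthetic data and the `LogisticRegression` model are placeholders. The data is assumed to be the training portion only, with the test set already set aside.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

# Placeholder for the training portion; the test set is assumed held out already.
X_train, y_train = make_classification(n_samples=500, n_features=20, random_state=0)

kf = KFold(n_splits=5, shuffle=True, random_state=42)  # K = 5

# Explicit loop: each fold serves as the validation set exactly once.
fold_scores = []
for train_idx, val_idx in kf.split(X_train):
    model = LogisticRegression(max_iter=1000)
    model.fit(X_train[train_idx], y_train[train_idx])  # train on K-1 folds
    fold_scores.append(model.score(X_train[val_idx], y_train[val_idx]))  # validate on 1 fold

print("Fold scores:", np.round(fold_scores, 3))
print(f"Mean: {np.mean(fold_scores):.3f}  Std: {np.std(fold_scores):.3f}")

# The same folds and model in a single call.
cv_scores = cross_val_score(LogisticRegression(max_iter=1000), X_train, y_train, cv=kf)
```

Reporting the standard deviation alongside the mean shows how much the score moves from one fold to the next.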

Frequently Asked Questions

What are good splitting ratios for train, validation, and test?

There is no single magic ratio. Common splits are 80/10/10 or 70/15/15. For very large datasets (millions of examples), the validation and test sets can be a smaller percentage (e.g., 98/1/1), because even 1% of the data is still a very large number of examples to get a robust evaluation.
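In the two-step recipe from the code example above, the second `test_size` is a fraction of the temporary set, not of the full dataset. A small hypothetical helper (not part of scikit-learn) makes the arithmetic explicit.

```python
def two_stage_test_sizes(train, val, test):
    """Convert target train/val/test fractions into the two test_size values
    for the two-step train_test_split recipe. Hypothetical helper for illustration."""
    if abs((train + val + test) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    first = val + test     # fraction of the full data carved off as the temporary set
    second = test / first  # fraction of the temporary set that becomes the test set
    return first, second

for ratios in [(0.8, 0.1, 0.1), (0.7, 0.15, 0.15), (0.98, 0.01, 0.01), (0.6, 0.2, 0.2)]:
    first, second = two_stage_test_sizes(*ratios)
    print(ratios, "-> first test_size =", round(first, 4), ", second test_size =", round(second, 4))
```

For 80/10/10 this gives 0.2 and then 0.5. For 60/20/20 it gives 0.4 and then 0.5. Whenever validation and test are the same size, the second value is 0.5.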

Why can’t I just use two sets (train and test)?

This is a common but critical mistake. If you tune your model’s hyperparameters using the test set, you are implicitly leaking information about the test set into your model. Your model will become optimized for that specific test set, and your final performance score will be an overly optimistic, biased estimate of how it will perform on truly new data.
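A small simulation reproduces this optimism. In this NumPy sketch, the labels are random coin flips, so no model can truly beat 50% accuracy. The sketch "tunes" by picking whichever of 100 random linear classifiers scores best on a 200-example test set. These classifiers are hypothetical stand-ins for hyperparameter settings. It then re-scores that winner on 10,000 fresh examples. The sizes and the seed are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n_features = 10

def make_data(n):
    # Labels are coin flips, independent of the features,
    # so the true accuracy of any classifier is 50%.
    X = rng.normal(size=(n, n_features))
    y = rng.integers(0, 2, size=n)
    return X, y

X_test, y_test = make_data(200)   # the "test set" we (wrongly) tune on
X_new, y_new = make_data(10_000)  # genuinely new data

# 100 hypothetical candidate models: random linear classifiers.
candidates = rng.normal(size=(100, n_features))

def accuracy(w, X, y):
    return np.mean((X @ w > 0).astype(int) == y)

test_scores = [accuracy(w, X_test, y_test) for w in candidates]
best = candidates[int(np.argmax(test_scores))]

best_on_test = max(test_scores)
best_on_new = accuracy(best, X_new, y_new)
print(f"Winner's score on the tuning 'test' set: {best_on_test:.3f}")
print(f"Winner's score on new data:              {best_on_new:.3f}")
```

The winner's test-set score lands above 50% purely because it was selected for doing well on that set. Its score on fresh data falls back to about 50%.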

What is data leakage?

Data leakage is the accidental exposure of information from outside the training dataset to the model during the training process. Using your test set to choose features or tune hyperparameters is a classic example. It is one of the most serious errors in machine learning because it leads to models that seem to perform brilliantly but fail completely in the real world.
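Feature selection is a common place for leakage to slip in. This sketch assumes scikit-learn and uses pure-noise data: 100 samples, 5,000 features and random labels. It compares two workflows. The leaky one selects the 20 "best" features with `SelectKBest` on the full dataset and then cross-validates. The honest one puts the selection step inside a `Pipeline`, so it is refit on each training fold only. The data sizes and `k=20` are arbitrary choices.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

rng = np.random.default_rng(0)
# Pure noise: labels are unrelated to every feature.
X = rng.normal(size=(100, 5000))
y = rng.integers(0, 2, size=100)

# Leaky: features are chosen using ALL labels, including each fold's validation labels.
X_leaky = SelectKBest(f_classif, k=20).fit_transform(X, y)
leaky = cross_val_score(LogisticRegression(max_iter=1000), X_leaky, y, cv=5).mean()

# Honest: selection is refit inside each training fold, never seeing validation labels.
pipe = make_pipeline(SelectKBest(f_classif, k=20), LogisticRegression(max_iter=1000))
honest = cross_val_score(pipe, X, y, cv=5).mean()

print(f"Leaky CV accuracy:  {leaky:.2f}")
print(f"Honest CV accuracy: {honest:.2f}")
```

Because the labels are random, any accuracy well above 50% here is an artifact of leakage. The pipeline version should hover around chance.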